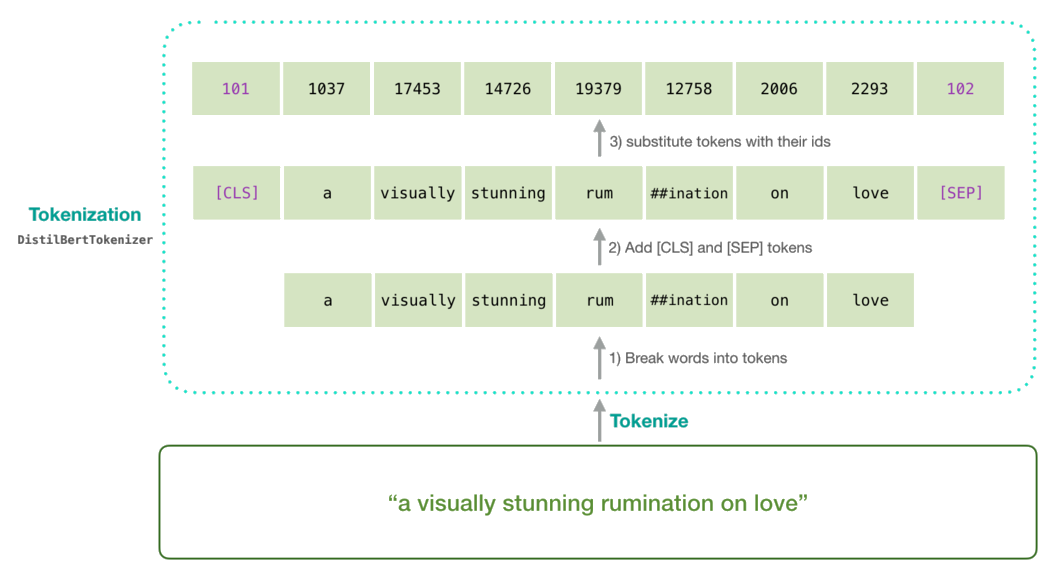

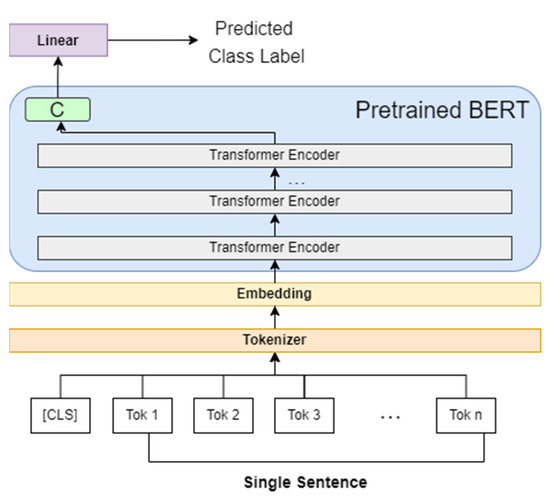

Hugging Face Transformers: Fine-tuning DistilBERT for Binary Classification Tasks | Towards Data Science

Results on sequence labeling (SL) tasks for BERT, ALBERT and ELECTRA.... | Download Scientific Diagram

Overview of ELECTRA-Base model Pretraining. Output shapes are mentioned... | Download Scientific Diagram

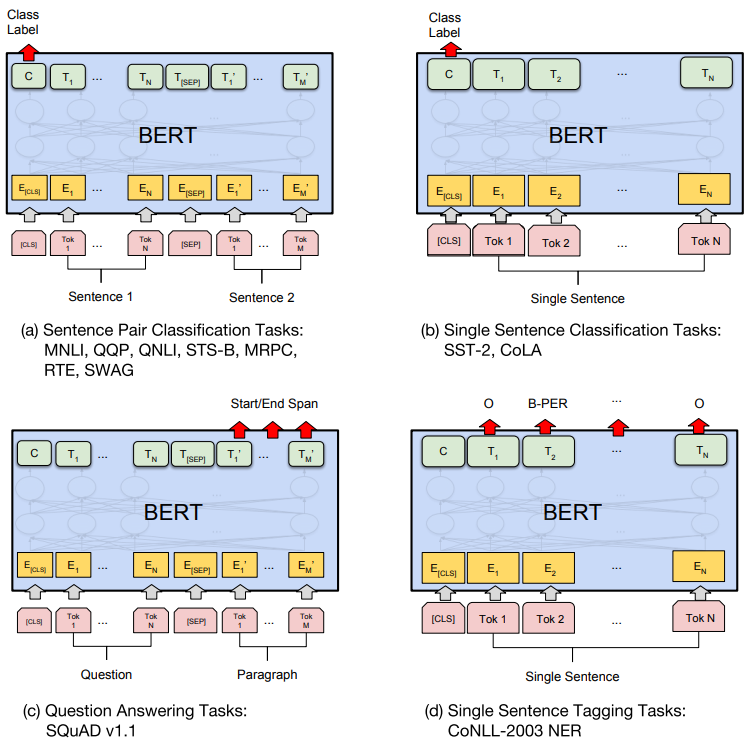

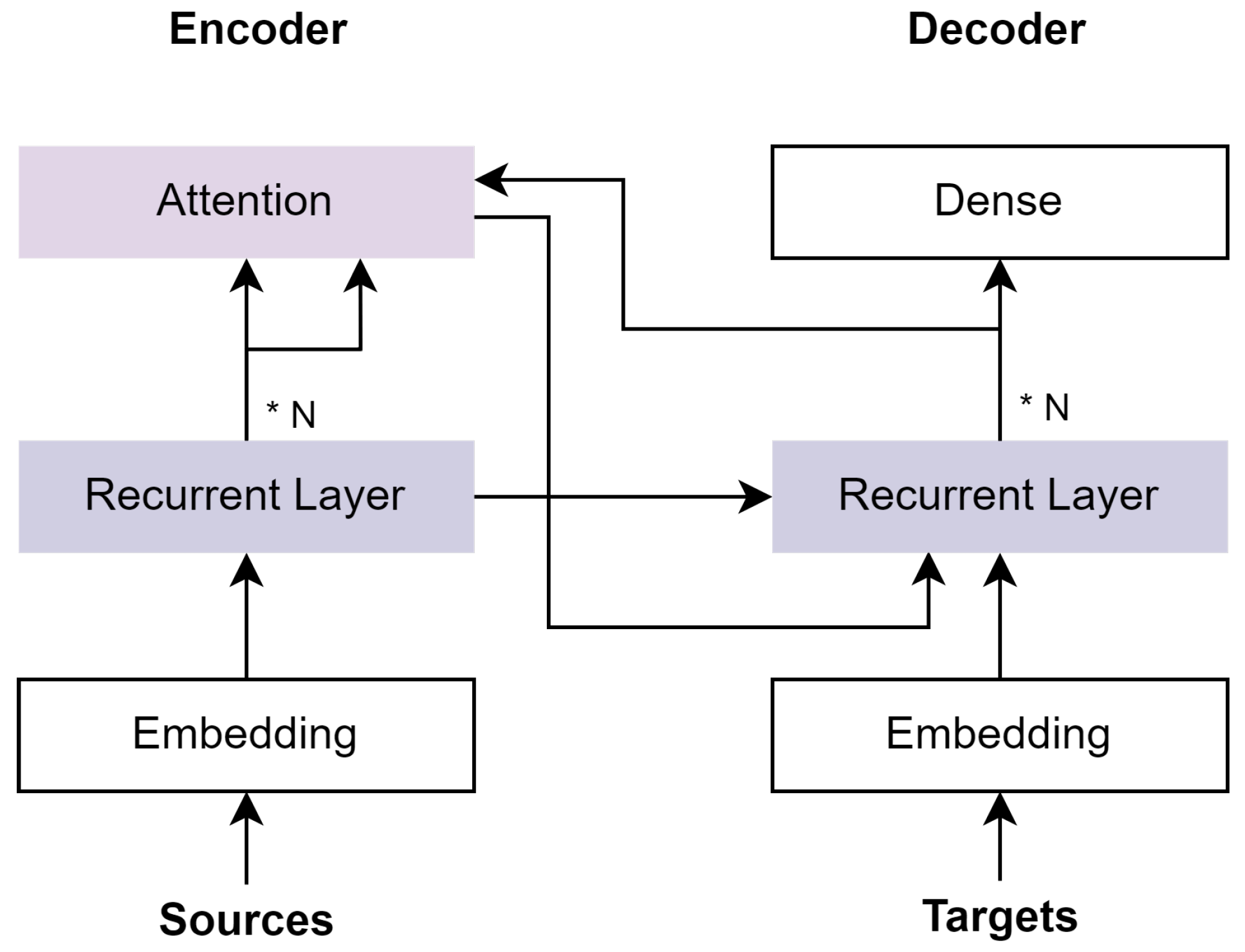

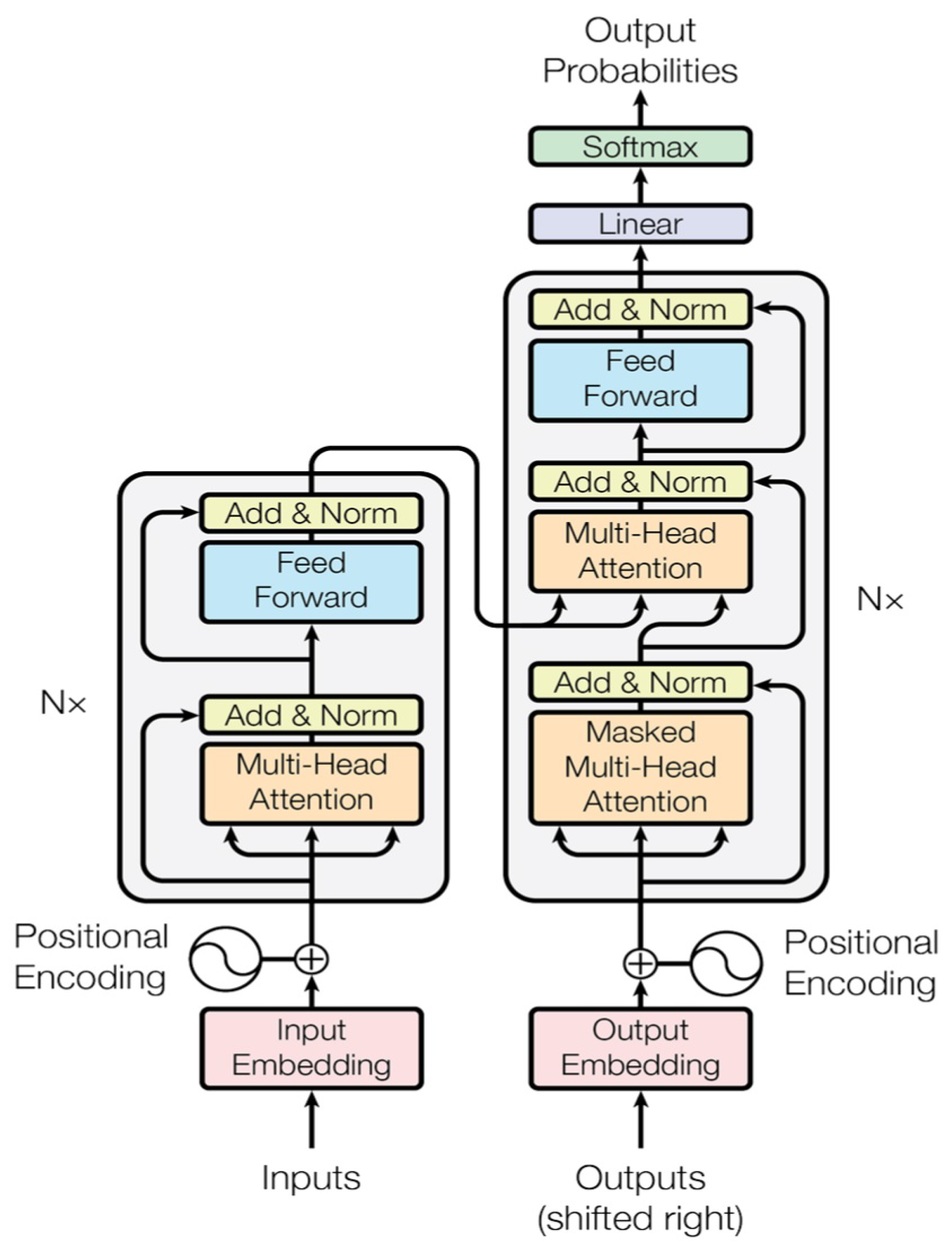

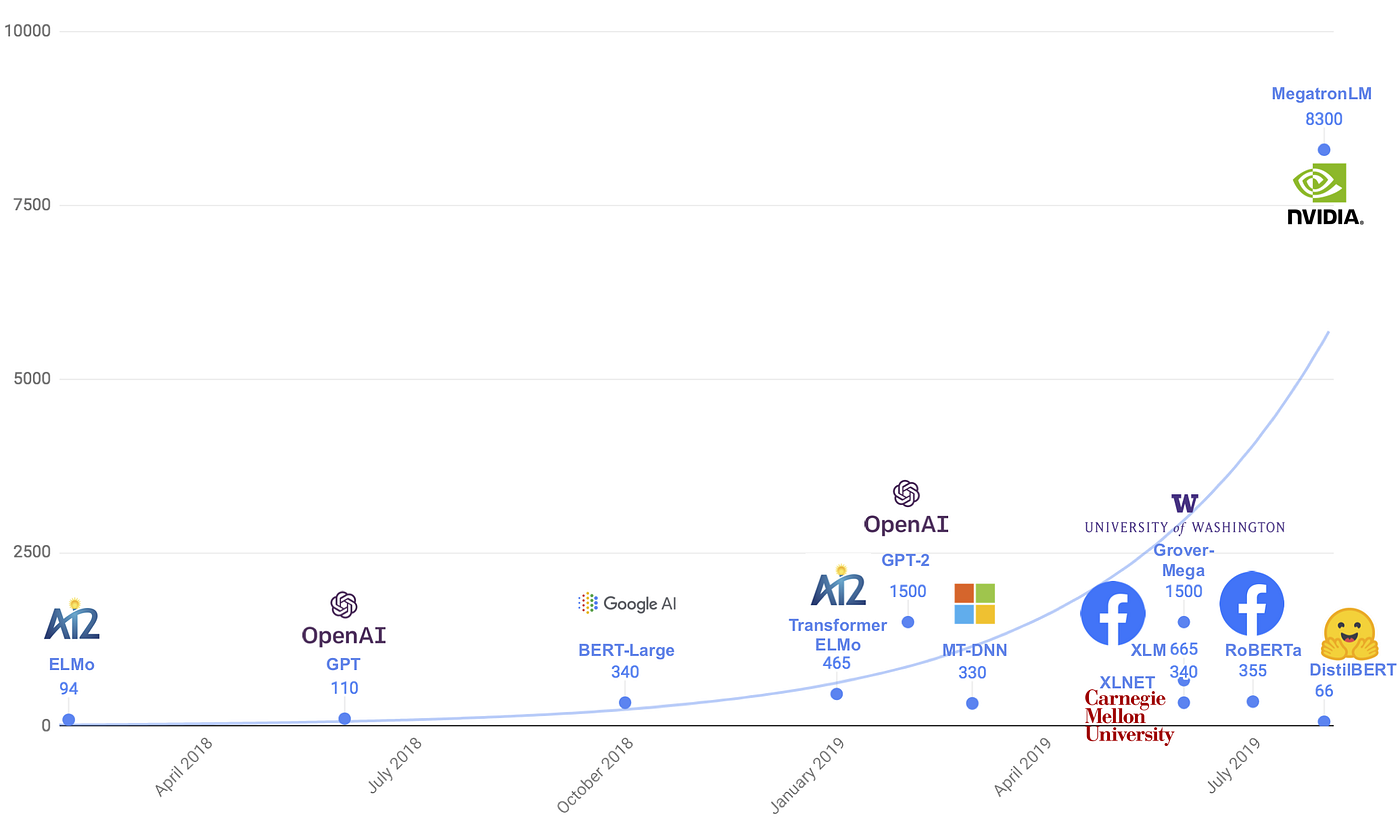

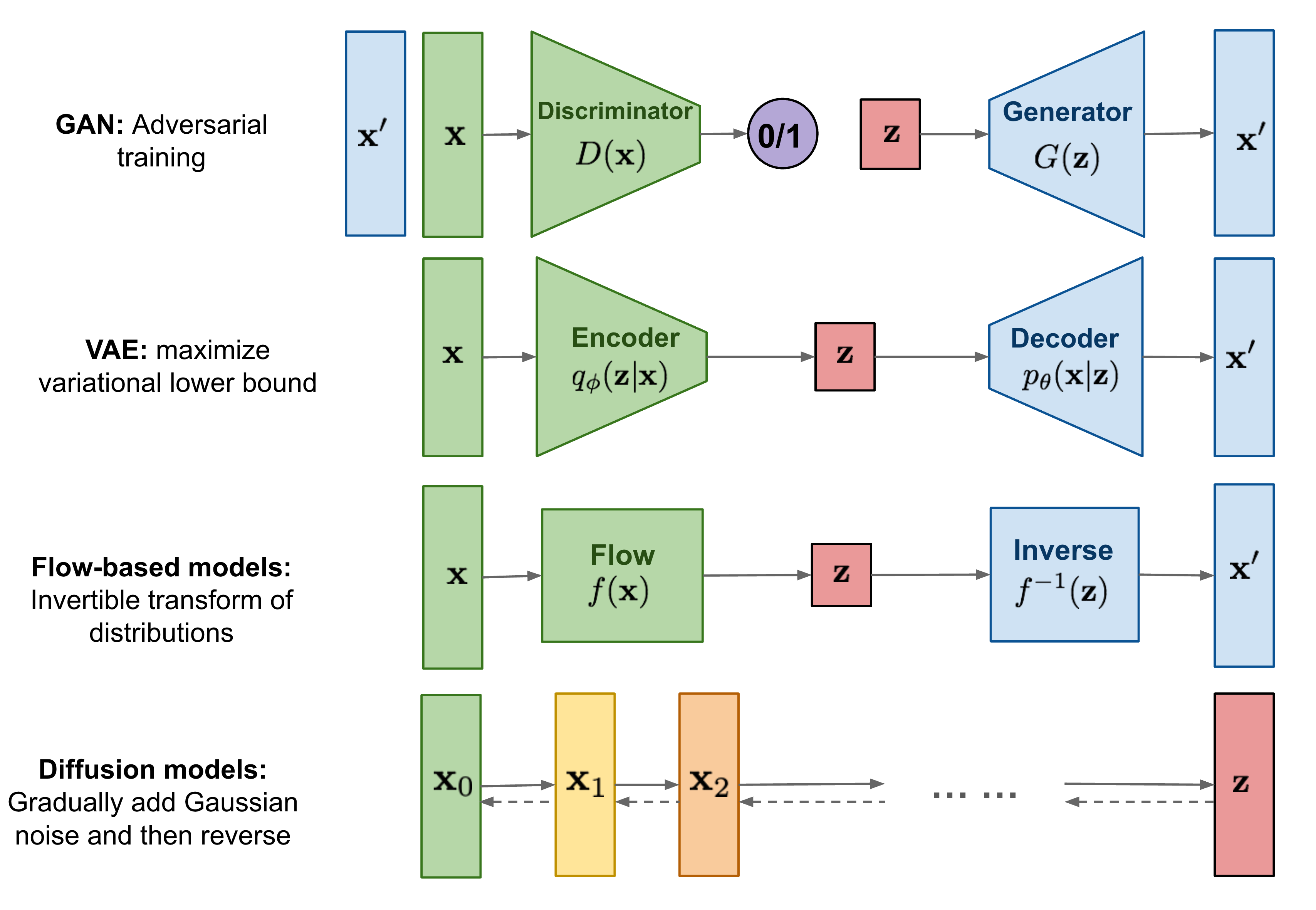

Applied Sciences | Free Full-Text | From Word Embeddings to Pre-Trained Language Models: A State-of-the-Art Walkthrough

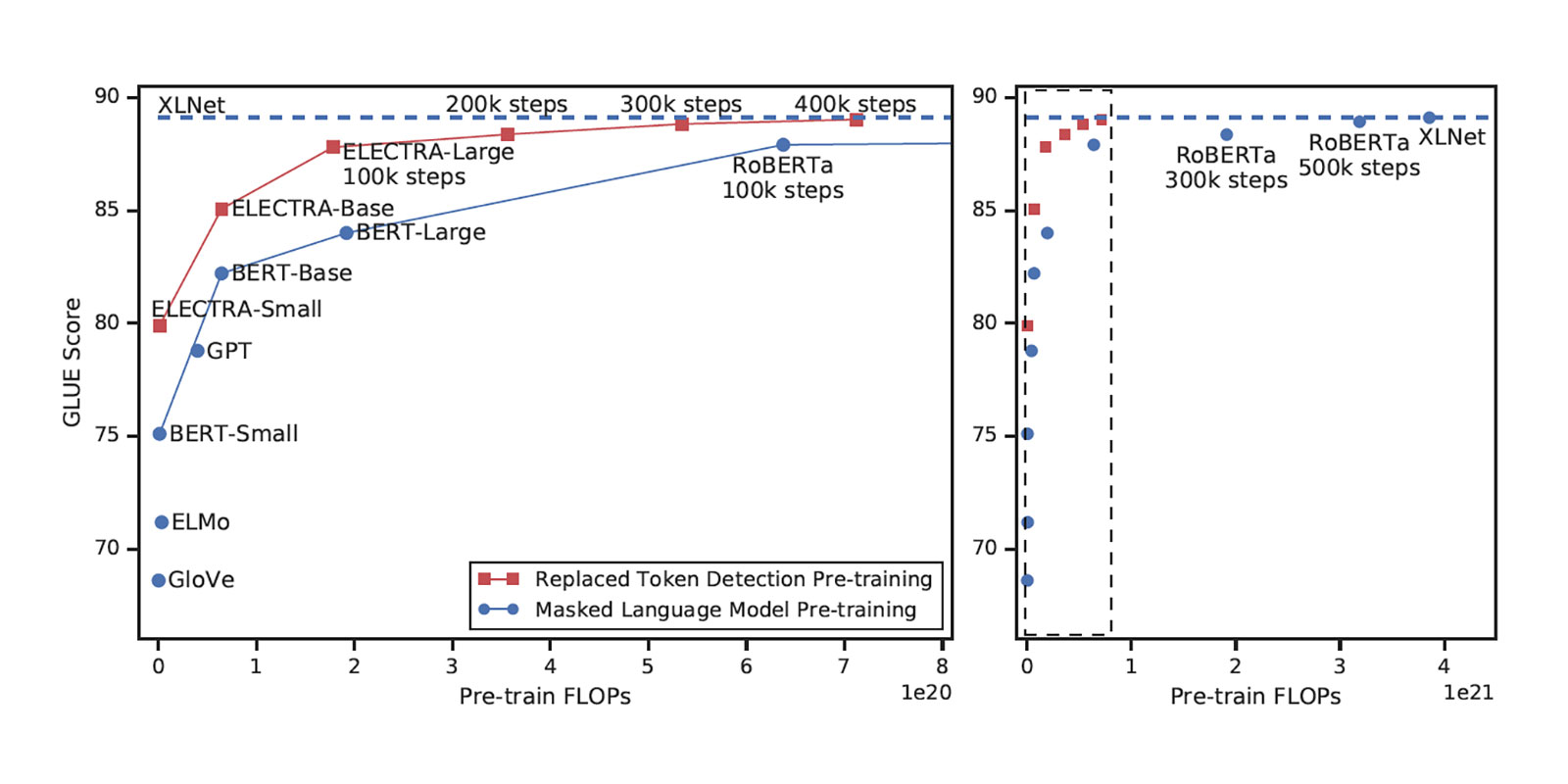

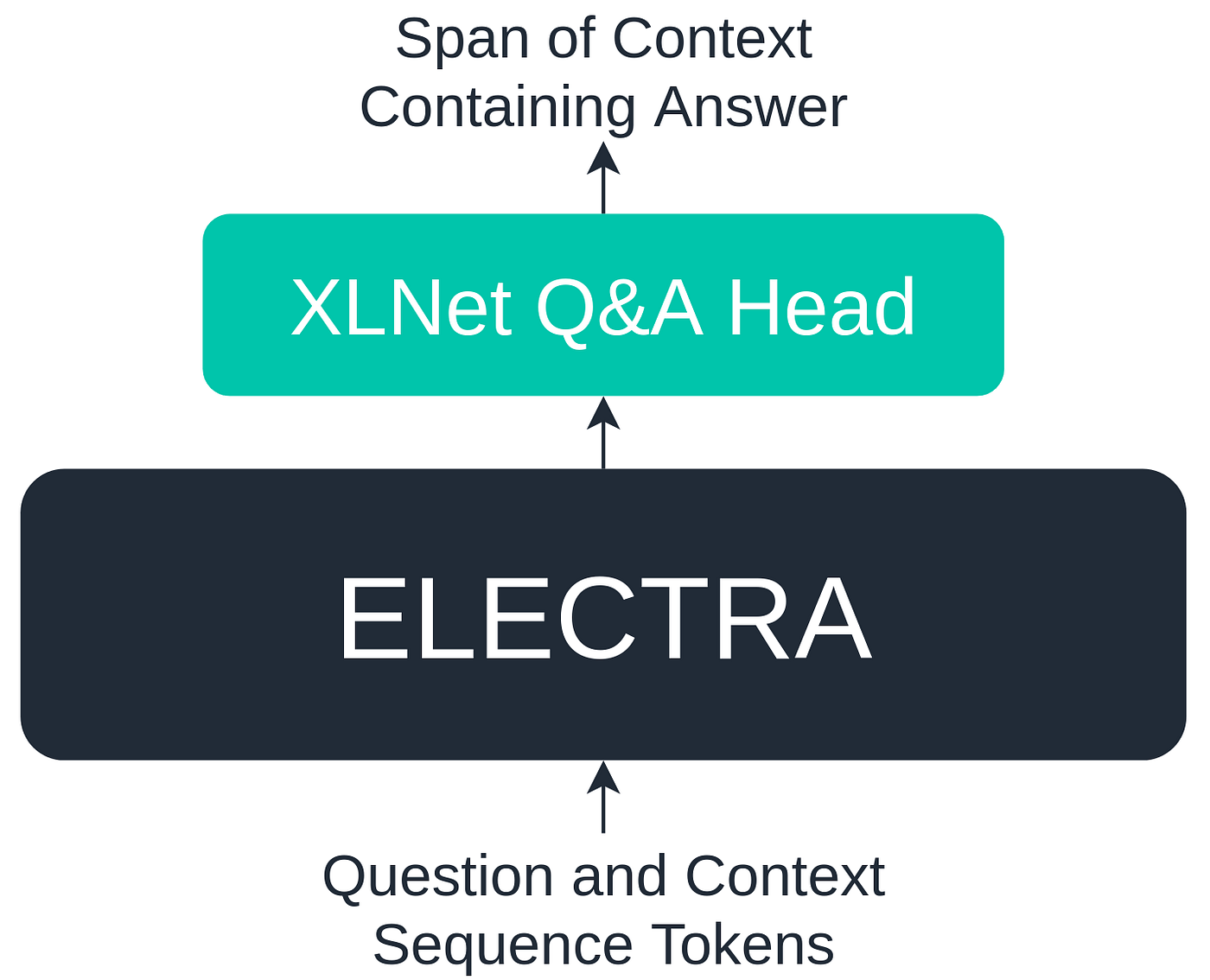

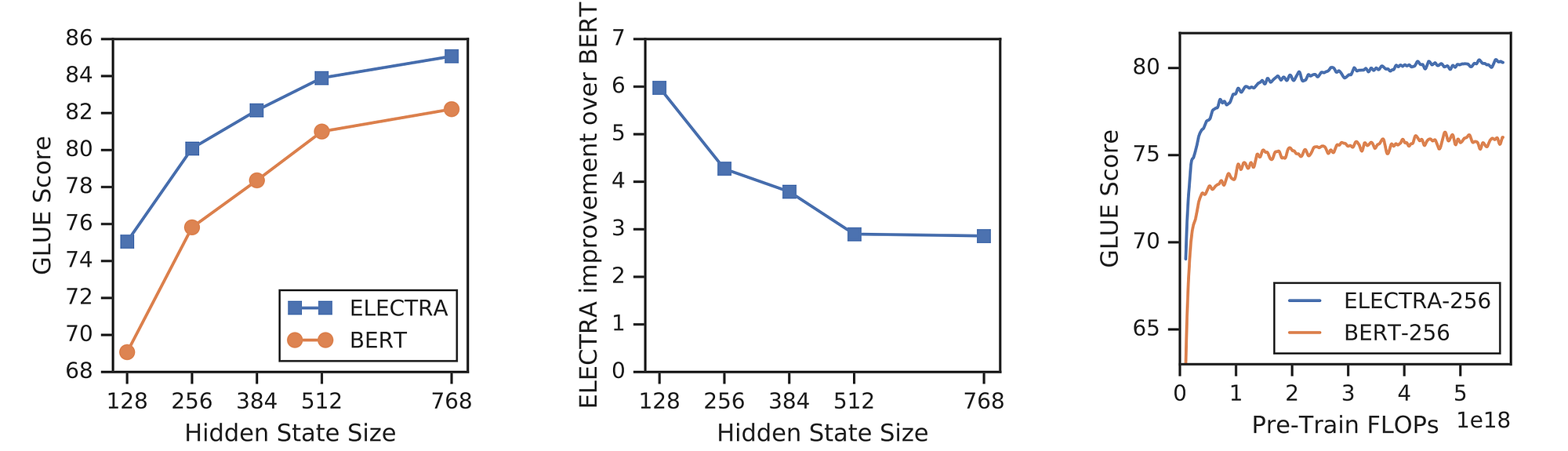

Understanding ELECTRA and Training an ELECTRA Language Model | by Thilina Rajapakse | Towards Data Science

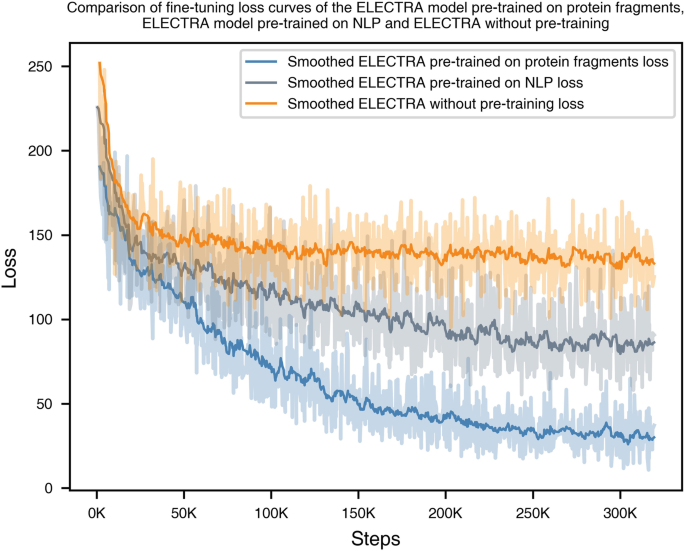

ELECTRA-DTA: a new compound-protein binding affinity prediction model based on the contextualized sequence encoding | Journal of Cheminformatics | Full Text

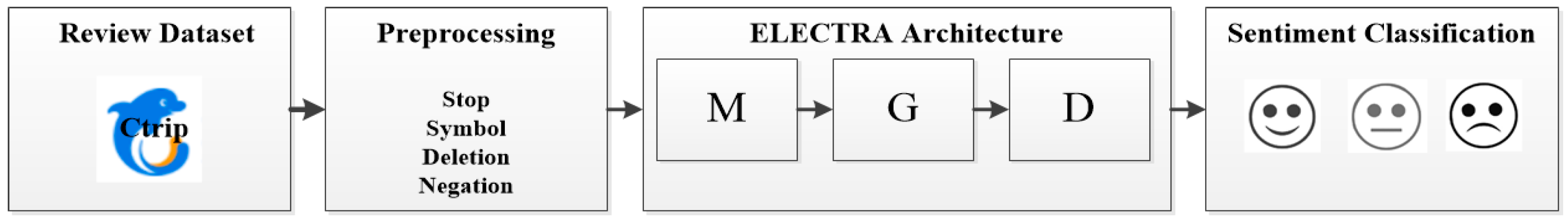

Applied Sciences | Free Full-Text | An Effective ELECTRA-Based Pipeline for Sentiment Analysis of Tourist Attraction Reviews

Applied Sciences | Free Full-Text | Comparative Study of Multiclass Text Classification in Research Proposals Using Pretrained Language Models

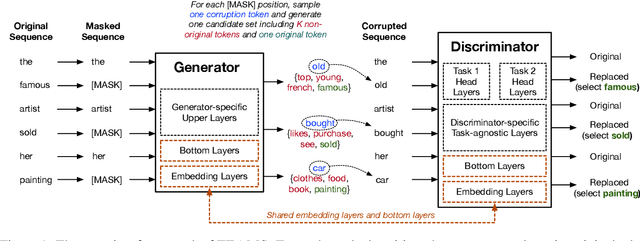

![PDF: Training ELECTRA Augmented with Multi-word Selection | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/27794bca3b7327aff29e2593e8b989b6a5af678b/4-Figure1-1.png)